Before anyone says anything: yes, I know there are others (Theano, for example), but this article is going to focus on the two big players in this space. If you have even dipped into any area of machine learning, not necessarily deep learning or reinforcement learning, the chances are excruciatingly high that you have either heard of or used one of these two libraries.

So first, a little background on both.

PyTorch is an open source machine learning library primarily developed and maintained by Facebook’s AI lab. TensorFlow 2.0 (TF2) is also an open source machine learning library: the second major version of the popular original TensorFlow library, primarily developed and maintained by Google.

They are both extremely popular and widely used throughout the artificial intelligence world. But which is better, and for what?

I believe it boils down to personal preference and requirements.

Don’t get me wrong: there are plenty of objective reasons why each can be superior, but subjectivity plays the biggest role. Take me as an example. I prefer to prototype and build my deep learning (DL) models in TF2 because I find its intuition and syntax better suited to how I like to create neural networks.

In contrast, when I am working on a reinforcement learning (RL) task I primarily utilise PyTorch, simply because I personally find it easier to work with for RL and prefer its API.

There are no concrete reasons why I prefer each library. It may simply be the way I learnt DL and RL, considering that I did in fact start DL in TF2 and RL in PyTorch, even though I had reservations about using PyTorch because of my dislike of Facebook as a company.

But what is the difference?

Yes, it is time we got on to that.

Fundamentally, PyTorch and TF2 share many function and class names, but there are key performance and usability differences that can make one or the other objectively superior.

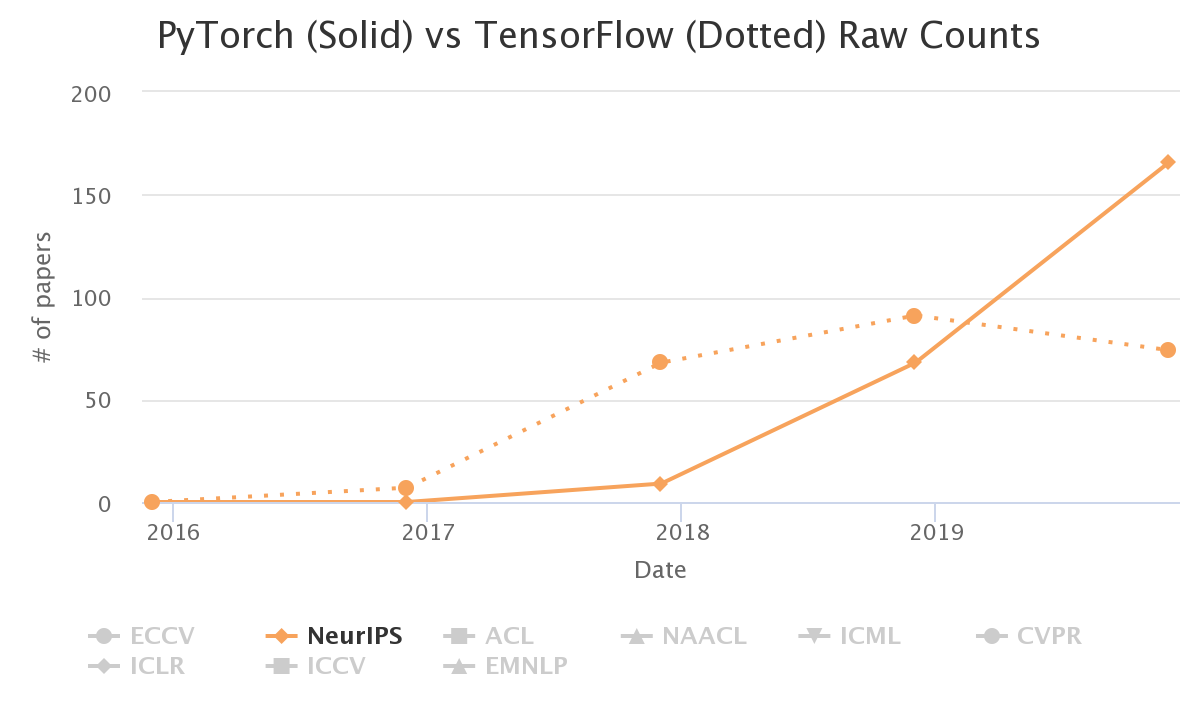

As the graph above shows, since mid-2018 PyTorch’s popularity in the research community has shot past TensorFlow’s. As a result, new implementations, tutorials, courses, and even reimplementations of older algorithms are increasingly likely to be written in PyTorch. This is a major objective benefit of PyTorch over TF2: a growing community and a growing body of examples online.

However, TF2 already has a huge community of supporters and followers who are extremely helpful and open to newcomers. This, coupled with the fact that it is incredibly intuitive and easy to learn (perfect for beginners), makes it the best choice when starting out in machine learning.

TF2 also has Keras built in. Keras is one of the most widely used high-level APIs in ML. Its original creator went to work at Google, and Keras has since become TensorFlow’s official high-level API, shipping inside TF2 as tf.keras, which makes it extremely easy to use and implement.
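To give a flavour of how little code Keras needs, here is a minimal sketch that builds and trains a small regression network with `tf.keras`. The toy data (four random inputs whose target is simply their sum) and the layer sizes are arbitrary choices for illustration, not recommendations.

```python
import numpy as np
import tensorflow as tf

# Toy data: the target is the sum of four random inputs (illustrative only)
rng = np.random.default_rng(0)
x = rng.random((256, 4)).astype("float32")
y = x.sum(axis=1, keepdims=True)

# A small fully connected network built with the Keras Sequential API
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])

# compile() wires up the optimiser and loss; fit() runs the whole training loop
model.compile(optimizer="adam", loss="mse")
history = model.fit(x, y, epochs=5, batch_size=32, verbose=0)
print(history.history["loss"])  # one loss value per epoch
```

The whole training loop (batching, forward pass, gradients, updates) is hidden behind `fit()`, which is exactly what makes Keras so quick for prototyping.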

TF2 is also built with production in mind: trained models can be exported (for example as a SavedModel, or converted for TensorFlow Lite) and served without any Python overhead, which makes it extremely useful for mobile and web deployments.
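As a rough sketch of what exporting looks like, a TF2 model can be saved in the SavedModel format, a serialised graph plus weights that tools such as TensorFlow Serving can load without running the original Python code. The `Doubler` module below is a hypothetical stand-in for a real trained model; reloading it in Python here is only a convenient way to check the export.

```python
import os
import tempfile

import tensorflow as tf


class Doubler(tf.Module):
    """Hypothetical toy model: multiplies its input by a stored weight."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(2.0)

    # A fixed input signature lets TensorFlow trace this method into a graph
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return self.w * x


export_dir = os.path.join(tempfile.mkdtemp(), "doubler")
tf.saved_model.save(Doubler(), export_dir)  # writes saved_model.pb plus variables/

# Reloading needs only the exported files, not the Doubler class definition
reloaded = tf.saved_model.load(export_dir)
print(reloaded(tf.constant([1.0, 2.0, 3.0])).numpy())  # [2. 4. 6.]
```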

Both libraries also support programming languages other than Python. PyTorch has bindings for C++ and Java, arguably two of the most popular development languages, but TF2 has it beat: with official APIs for C++, Java, JavaScript, Go, and Swift, as well as community-supported bindings for C#, Haskell, Julia, MATLAB, R, Ruby, Rust, and Scala, TF2 has a much broader scope of application than PyTorch.

Current standings – PyTorch: 1, TensorFlow 2: 4

But here comes the big one.

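Unlike TensorFlow’s original static graphs, PyTorch builds its computation graph dynamically as the code runs (how this works under the hood is something I do not consider myself knowledgeable enough to explain). TF2 added eager execution to mimic this Pythonic style, but it is layered on top of a graph-based design, and I believe PyTorch’s native approach makes it faster. I think that deserves more than one point.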

Additionally, PyTorch gives developers and researchers a greater level of control over their training process and their models in general. By all but requiring users to build neural networks through subclassing, and by not including a prebuilt fit (training) function, it lets researchers control every detail of training, down to the finest hyperparameters, at the cost of being a tad tedious and slow for prototyping. This is extremely important for paradigms such as RL, where agents are notoriously sensitive to their hyperparameters.
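Here is a minimal sketch of that style: a network defined by subclassing `nn.Module` and trained with a hand-written loop. `TinyNet`, the toy data and the gradient-clipping step are illustrative assumptions; the point is that every stage of an update is explicit code you can change.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical two-layer network, defined by subclassing nn.Module."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(4, 16)
        self.out = nn.Linear(16, 1)

    def forward(self, x):
        return self.out(torch.relu(self.hidden(x)))


torch.manual_seed(0)
x = torch.rand(256, 4)
y = x.sum(dim=1, keepdim=True)  # toy target: the sum of the inputs

model = TinyNet()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fn = nn.MSELoss()

losses = []
for step in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass
    loss.backward()              # backward pass
    # Because the loop is ours, extra steps slot in anywhere, e.g. clipping:
    nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)
    optimizer.step()             # apply the update
    losses.append(loss.item())

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

Swapping the optimiser, changing the learning rate mid-training, or logging a particular gradient is just a matter of editing the loop body.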

By contrast, TF2 has a prebuilt fit (training) function as well as the option to write your own. It also has the Sequential API (PyTorch has an equivalent, nn.Sequential, but I find it far less useful) and the Functional API, both of which are simply phenomenal. Personally I prefer the Functional API, as it allows you to create networks that are not a simple linear stack of layers, such as recommender systems and GANs, while still being very easy and intuitive to use.
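As an example of a non-sequential model, here is a sketch of a tiny matrix-factorisation-style recommender in the Functional API: two separate inputs (a user ID and an item ID) are embedded and combined with a dot product. The sizes and the random training data are placeholders, not a recipe for a real recommender.

```python
import numpy as np
import tensorflow as tf

NUM_USERS, NUM_ITEMS, DIM = 100, 50, 8  # illustrative sizes

# Two inputs: the graph branches, which a Sequential model cannot express
user_in = tf.keras.Input(shape=(1,), dtype="int32", name="user_id")
item_in = tf.keras.Input(shape=(1,), dtype="int32", name="item_id")

user_vec = tf.keras.layers.Flatten()(tf.keras.layers.Embedding(NUM_USERS, DIM)(user_in))
item_vec = tf.keras.layers.Flatten()(tf.keras.layers.Embedding(NUM_ITEMS, DIM)(item_in))

# Predicted rating = dot product of the user and item embeddings
score = tf.keras.layers.Dot(axes=1)([user_vec, item_vec])

model = tf.keras.Model(inputs=[user_in, item_in], outputs=score)
model.compile(optimizer="adam", loss="mse")

# Random placeholder interactions, just to show the model trains end to end
rng = np.random.default_rng(0)
users = rng.integers(0, NUM_USERS, size=(512, 1)).astype("int32")
items = rng.integers(0, NUM_ITEMS, size=(512, 1)).astype("int32")
ratings = rng.random((512, 1)).astype("float32")
history = model.fit([users, items], ratings, epochs=3, batch_size=64, verbose=0)

preds = model.predict([users[:5], items[:5]], verbose=0)
print(preds.shape)  # (5, 1)
```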

At this point you may be wondering: if TF2 seems superior, why is PyTorch gaining popularity?

The answer is speed and control. As problems in artificial intelligence grow increasingly complex, those two qualities make PyTorch the obvious choice for many. Having to do more of the fine-tuning and work yourself to build the same model in PyTorch allows a level of design creativity not easily achieved in TF2. It also makes the model less of a black box and more of an “I know what’s going on inside my neural network” scenario. That is very good for understanding, research, and especially debugging, which is why it is perfect for RL.
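One concrete way PyTorch opens up the black box is through hooks. The sketch below uses `register_forward_hook` to capture a hidden layer’s activations during a forward pass; the small `nn.Sequential` model and the `save_activation` helper are illustrative choices, not part of any particular workflow.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))

activations = {}


def save_activation(name):
    """Hypothetical helper: returns a hook that stores a layer's output under `name`."""
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook


# Attach the hook to the ReLU (index 1) so every forward pass records its output
handle = model[1].register_forward_hook(save_activation("relu"))

out = model(torch.randn(2, 4))
print(activations["relu"].shape)              # torch.Size([2, 8])
print(activations["relu"].min().item() >= 0)  # True: ReLU outputs are non-negative

handle.remove()  # detach the hook once you are done inspecting
```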

After researching and writing this article, I think I now understand why I was drawn to each library for DL and RL respectively: for the very reasons described above.

![TensorFlow vs. PyTorch](https://theneuralnetworkblog.wordpress.com/wp-content/uploads/2020/06/d11f9-14yokbagxkdkoljqwaw_7oa.png)

One final point for TF2 is TensorBoard, which lets you graph and visualise the training process of your models. Although PyTorch can do this too with external libraries (including TensorBoard itself, via torch.utils.tensorboard), the built-in nature of TensorBoard in TF2 makes it very appealing.
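Hooking TensorBoard into Keras training takes a single callback. In this sketch the model, data and temporary log directory are placeholders; after running it, pointing `tensorboard --logdir` at the printed directory should show the loss curves.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

rng = np.random.default_rng(0)
x = rng.random((256, 4)).astype("float32")
y = x.sum(axis=1, keepdims=True)  # toy target

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The callback writes event files that TensorBoard reads
log_dir = tempfile.mkdtemp()
tensorboard_cb = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
model.fit(x, y, epochs=3, verbose=0, callbacks=[tensorboard_cb])

# Collect the event files the callback produced (they live in subdirectories)
event_files = [f for _, _, files in os.walk(log_dir) for f in files
               if f.startswith("events.out.tfevents")]
print(f"{len(event_files)} event file(s) written under {log_dir}")
```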

What do you think? If you are an ML developer, which library do you prefer, and why? If you are considering going into ML, which library will you choose?

There are lots of good answers to those questions, so please share yours in the comments. But one thing is clear: for beginners, go with TensorFlow, and perhaps pivot to PyTorch later.